WEDNESDAY, 18 JANUARY 2012

What is intelligence? We all think we know, yet this question continues to challenge psychologists, neuroscientists and sociologists in equal measure. To provide an answer, we might suggest that intelligence is related to the quantity that IQ tests measure. However, as we shall see, this simple suggestion throws up paradoxes and all sorts of other problems.

Attempts to quantify intelligence began long before the birth of experimental psychology and the establishment of modern methods for probing the brain. Throughout the 19th century many scientists thought that craniometry held the answer. They believed that the shape and size of the brain could be used to measure intelligence, and so set about collecting and measuring hundreds of skulls. Paul Broca was a key proponent of this view and invented a number of measuring instruments and methods for estimating intelligence. A great deal of Broca’s work, and that of his contemporaries, was devoted to confirming racial stereotypes. When they were proved wrong they often blamed their scientific theories, rather than retracting their racist views.

The precursor of our modern IQ tests was pioneered by French psychologist Alfred Binet, who only intended it to be used in education. Commissioned by the French government, the Binet test was designed to identify students who might struggle in school so that special attention could be paid to their development. With some help from his colleague Theodore Simon, Binet made several early revisions to his test. The pair selected items that seemed to predict success at school without relying on concepts or facts that might actually be taught to the children. Then Binet had a spark of inspiration: he came up with the idea of standardising his scale. By comparing an individual’s score with the average abilities of children in particular age groups, the Binet-Simon test could assign each child a mental age.

Although Binet stressed the limitations of his scale for mass testing, his idea was soon taken up by those with more expansive testing ambitions. Lewis Terman, from Stanford University, published a further, refined version in 1916 and named it the Stanford-Binet Intelligence Scale, versions of which are still used today. This is now standardised across the whole population and its output was named the Intelligence Quotient according to William Stern’s suggestion. After a somewhat protracted delivery, IQ was born.
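
As a rough illustration of the quotient idea, Stern’s ratio divides a child’s mental age by their chronological age, and Terman’s revision multiplied the result by 100. The sketch below assumes that classic ratio form. The function name and the example ages are hypothetical, and modern tests instead score people against the distribution of the whole population.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Classic ratio IQ: mental age over chronological age, times 100."""
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    return 100.0 * mental_age / chronological_age


# Hypothetical children, not real data.
print(ratio_iq(10, 8))  # an 8-year-old performing like a typical 10-year-old
print(ratio_iq(8, 8))   # an 8-year-old performing exactly at age level
```

A child performing above their age level scores over 100 on this scale, and one performing at age level scores exactly 100.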

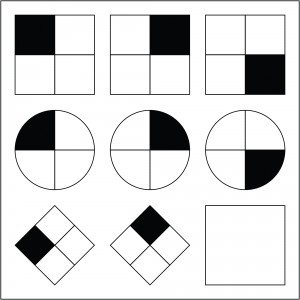

Two of the most commonly used IQ tests are the revised Wechsler Adult Intelligence Scale and Raven’s Progressive Matrices. Despite having very little in common in their procedure, there is a strong correlation between performances on these two tests. The fact that the variance in one test can therefore be used to explain a large degree of the variance in the other suggests that they are in part measuring a common quantity. Yet, after taking each test, an individual is given two different IQ scores. How, then, can any individual IQ test be used to derive a measure of a person’s intelligence?
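
To make the idea of one test ‘explaining’ the variance in another concrete, the sketch below computes the Pearson correlation r between two sets of scores and squares it to give the proportion of shared variance. The scores are made-up numbers for six hypothetical people, not real test data, and the function name is an assumption for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for six people on two different tests (made-up numbers).
test_a = [95, 102, 110, 88, 120, 105]
test_b = [98, 100, 115, 90, 118, 101]

r = pearson_r(test_a, test_b)
print(f"correlation r = {r:.2f}, shared variance r^2 = {r * r:.2f}")
```

Note that a high correlation does not require the two scores to agree for any one person: each individual in the made-up data still receives two different numbers.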

Charles Spearman thought that this difficulty could be overcome by accessing the underlying property that he believed was driving the correlations. Spearman postulated the existence of a single general intelligence factor, ‘g’, and attempted to uncover it by developing a statistical technique, called factor analysis, to compare correlations. Thus, within a group of IQ tests, the one that correlates best with all the others is taken to provide the best measure of ‘g’. Raven’s Matrices are usually considered the best correlate with ‘g’ and are therefore said to carry a “high ‘g’ loading”.
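
A minimal sketch of how a ‘loading’ can be read off a table of correlations is given below. It is not Spearman’s own procedure: it swaps in the first principal component of the correlation matrix, found by power iteration, as a simple stand-in for a full factor analysis. The four tests and their correlations are invented for illustration.

```python
from math import sqrt


def first_component_loadings(corr, iterations=200):
    """Loadings of each test on the first principal component of `corr`.

    Uses power iteration; a simple stand-in for a full factor analysis.
    """
    n = len(corr)
    vec = [1.0] * n
    eigenvalue = 0.0
    for _ in range(iterations):
        product = [sum(corr[i][j] * vec[j] for j in range(n)) for i in range(n)]
        eigenvalue = sqrt(sum(p * p for p in product))
        vec = [p / eigenvalue for p in product]
    # Scaling by sqrt(eigenvalue) turns the direction into correlations
    # between each test and the common component.
    return [sqrt(eigenvalue) * v for v in vec]


# Hypothetical correlation matrix for four tests (made-up numbers).
names = ["test A", "test B", "test C", "test D"]
corr = [
    [1.00, 0.60, 0.65, 0.50],
    [0.60, 1.00, 0.50, 0.40],
    [0.65, 0.50, 1.00, 0.45],
    [0.50, 0.40, 0.45, 1.00],
]

loadings = first_component_loadings(corr)
for name, loading in zip(names, loadings):
    print(f"{name}: loading {loading:.2f}")
```

In this invented example, test A correlates most strongly with the others and so receives the highest loading, which is the sense in which a test is said to be the best measure of the common factor.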

Yet ‘g’ has been known to wander, with its precise numerical definition varying depending on which IQ tests are included in the group. In other words, intelligence is a quality defined by our conception of it. The so-called Flynn paradox throws up an even more serious challenge for ‘g’. Average IQ scores have crept gradually upwards over the past century. Projecting this trend backwards, however, implies an average IQ of around 60 at the turn of the 20th century, making the majority of our ancestors ‘mentally retarded’ by today’s standards. This is clearly nonsensical and seriously hinders any attempt to link IQ score to genetics, since the genetic makeup of individuals has surely not changed substantially over the course of a few generations.

Additional backlash has come from those who prefer to focus on the differences rather than the correlations between different tests for IQ. Visual mapping of numerous tests according to the strength of their correlations reveals an interesting pattern. Rather than being dispersed evenly, the tests form clusters. Louis Thurstone used this phenomenon to suggest that maybe the thing we call intelligence is a composite of multiple different intelligences. Howard Gardner has taken this a step further by suggesting that the concept of intelligence should be extended to include a wide range of capabilities including musical, spatial and intrapersonal ability.

The murky waters of intelligence testing are clouded further by controversies relating to differences in average performance between genders and social groups. It is known that a large number of items on intelligence tests are culturally loaded, such that certain members of society have an unfair advantage.

However, many psychologists are still convinced that real differences between populations exist. Philip Kitcher has suggested that because it is such a controversial topic, research into gender or racial differences in IQ should not be undertaken. Yet, intelligence is such an important and interesting subject that few have heeded this suggestion. Even if our understanding is still far from perfect, we have come a long way from the days of relying on measurements of head size and brain weight. The enigma of intelligence is surely worth probing further.

We are now entering the quagmire that is the genetics of intelligence; an area of study with an almost 150-year history that has been filled with conflict. The founding father of the heritability of intelligence was Sir Francis Galton, an alumnus of Trinity College, Cambridge, who made a formidable contribution to a wide variety of areas including genetics, statistics, meteorology and anthropology. Greatly influenced by his half cousin, Charles Darwin, who looked for variation between related species, Galton began studying intra-species variation and humans were his species of choice. By studying the obituaries of the prominent men of Europe in The Times, Galton hypothesised that “human mental abilities and personality traits, no less than the plant and animal traits described by Darwin, were essentially inherited”.

Galton coined two of the most infamous phrases in the heritability of intelligence debate: ‘nature versus nurture’ and ‘eugenics’. The word eugenics stems from the Greek for ‘good birth’ and Galton believed that in order to improve the human race those of high rank should be encouraged to marry one another. The early part of the 20th century saw the most devastating effect of delving into the heritability of intelligence, as the eugenics movement grew and resulted in mass genocide. To this day, the field remains highly controversial, and the so-called ‘genius gene’ remains elusive.

Galton, on the other hand, spent a large portion of his life working on the ‘nature versus nurture’ argument, and, with the backing of his behavioural studies in twins, he came down strongly on the side of nature. Twin studies have become the standard method to estimate the heritability of IQ and consist of comparisons between twins that were separated at birth (adoption studies), or between genetically identical and genetically dissimilar twins raised together. The results are very clear, with identical twins being significantly more similar in terms of intelligence than their non-identical counterparts. This similarity persists regardless of whether or not the twins are raised together and has been reinforced again and again over many decades. Estimates of the heritability of IQ are between 0.5 and 0.8, implying that up to as much as 80 per cent of intelligence is predetermined in our genetic make-up.
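
One common textbook shortcut for turning twin comparisons into a heritability figure is Falconer’s formula, which doubles the difference between the IQ correlation of identical twins and that of non-identical twins. The sketch below assumes that formula. The two correlations are hypothetical values chosen for illustration, and real studies use more elaborate models.

```python
def falconer_heritability(r_identical: float, r_fraternal: float) -> float:
    """Falconer's estimate: twice the gap between the two twin correlations."""
    return 2.0 * (r_identical - r_fraternal)


# Hypothetical twin correlations for IQ (made-up numbers, not study results).
r_identical = 0.85
r_fraternal = 0.55

h2 = falconer_heritability(r_identical, r_fraternal)
print(f"estimated heritability: {h2:.2f}")
```

The estimate describes how much of the variation across a population tracks genetic differences, so it is a statement about groups rather than about any one person.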

However, heritability is a complex issue, plagued by a number of common misconceptions. The observed IQ of an offspring is determined by the combined effects of genotype (genes held by offspring) and environmental factors. Twin studies suggest that, for IQ, the genotype is the larger partner in this balance. However, our genotype is not just a combination of the genes we inherit from our parents, but also includes how those genes interact, just as a cake is not merely the combination of flour, eggs, butter and sugar but a whole new entity. This is true for all complex traits, and it makes determining the causative genes very difficult.

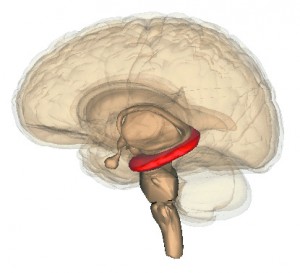

There have been a number of genome-wide association studies in humans to try to determine the genes which are responsible for intelligence, but they have proved quite unfruitful. These studies rely on huge populations to find reproducible associations which aren’t due to chance. Furthermore, perhaps due to the controversial nature of the topic, studies have failed to receive the necessary funding compared to disease-related studies. In fact, the strongest genetic contenders have also been found in other cognition-related studies, such as APOE, a gene which is a risk factor for Alzheimer’s disease, and COMT, which is implicated in schizophrenia. This backs up suggestions that these diseases are related to intelligence.

Michael Meaney of McGill University, Montréal, argues for the other side of the heritability debate, saying that “there are no genetic factors that can be studied independently of the environment”. Meaney suggests that there is no gene which isn’t influenced by its environment, and this is part of a new wave of thinking about heritability of IQ. A group in Oxford demonstrated the influence of the environment exquisitely in a recently published study. Using MRI, they could see changes in specific parts of the brain which correlated with changes in scores on IQ tests in a group of teenagers over a four-year period. They saw growth in areas of the brain responsible for verbal communication or hand dexterity in teenagers whose test scores increased over the years. Even more amazingly, in those whose score either stayed the same or went down they saw no changes in these parts of the brain.

This suggests that our genes are more of a starting line for our potential intellect, and that our intelligence can be drastically altered by our environment. Height provides an easy illustrative tool. Over the past century, we have seen a huge increase in average height. This is due to improvements in nutrition, not because everyone’s height genes suddenly kicked in at the same time. The same is true for intelligence: if one works hard and practises at something, this environment will lead to improvements in intelligence. London cab drivers were part of a study which showed that, by having to memorise the street names of London to get their licence, they actually increased the size of the hippocampus, a part of the brain needed for memory.

Where did we get this ability to improve our intelligence from? How did our particular species, Homo sapiens, become intelligent enough to develop technology that allows us to dominate the globe? Why, of all species, should it have been us that ended up so clever?

Many of the cognitive abilities that we think of as ours, such as intelligence, are actually not unique to us at all but are shared with many other species. Crows and ravens are capable of solving complex problems; jays and squirrels can remember the location of thousands of food caches for months on end; even the humble octopus uses shells as tools. Yet the capabilities of these animals seem to be surpassed by those of chimpanzees and other great apes. They show insight learning, meaning that they can solve a novel problem on the first attempt without any trial and error; they use a wide variety of tools, including spears for hunting small mammals; and they engage in deception of others. One of Jane Goodall’s chimps, Beethoven, was able to use tactical deception to mate with females despite the alpha male, Wilkie, being present. Beethoven was able to provoke Wilkie through insubordination within the group and then, when Wilkie was occupied with reasserting his authority through dominance displays, Beethoven would sneak to the back of the group and mate with the females there. Many primatologists have claimed that this sort of deception lies at the heart of understanding human cognition because to be able to lie to someone you have to have a theory of mind. You have to be able to place yourself inside the mind of others and to understand that they are likely to react in the same way as you would in that situation. So can a chimp really put itself in another chimp’s shoes?

We know very little about what it is that makes our brain so special. It certainly isn’t the largest in the animal kingdom: the sperm whale has a brain six times the size of ours. The highly intelligent corvid birds—crows, rooks, and jackdaws—have tiny brains compared to camels or walruses, two species not known for their cerebral feats. So if our brains are large for our body size but otherwise unremarkable, and many of our intelligent traits are shared with other members of the animal kingdom, then what could it have been that catapulted us into the position of being able to use our intelligence to dominate the world around us?

Spoken language is one possibility. While we can teach apes to understand English to a certain extent, they can’t physically speak—the shape of their larynx and mouth doesn’t allow it—and animals that can speak, such as parrots, don’t necessarily have any actual understanding of what they’re saying. Being able to use spoken language is a wonderful adaptation for living in social groups; it enables any of the individuals in the group to effortlessly communicate with the others a complex concept or a new idea, and then the others can contribute their own ideas or improvements just as easily. Arguably language might even have given us the ability to ‘plug in’ to other people’s brains, allowing the development of a ‘hive-mind’, where the brainpower of many people could be pooled together.

While the actual evolutionary story of intelligence might be lost in the mists of time, we may still wonder why it was us who became so brainy. Some have argued that we had to, to be able to cope with living in social groups much larger than those of chimpanzees. We needed intelligence to be able to maintain alliances, form coalitions, and engage in deception. Or could it have been for making love rather than war? Intelligence might have been an indicator of good genes and a healthy upbringing to a potential mate; after all, many cases of learning difficulties come about due to malnutrition or disease early in childhood.

Human intelligence has evolved surprisingly quickly; it has been built up over millions of years from the already impressive cognitive abilities of our ape ancestors, and accelerated massively through our use of tools and language. Now, at the dawn of the 21st century, it seems likely that we will soon see a leap of the sort that we have never seen before: for better or for worse it will not be long until we can use electronic technology to ‘improve’ our brains as we wish.

Many are already working towards creating intelligence. The idea of imbuing a machine with human capabilities has occupied artificial intelligence (AI) researchers for more than 60 years. The machines in question are generally computers, since we already know these can be programmed to simulate other machines. The best-known test we have for assessing computer intelligence is the Turing test, proposed in 1950 by Alan Turing. He suggested that if a machine could behave as intelligently as a human then it would earn the right to be considered as intelligent as a human. Whilst no computer has yet passed the Turing test, many AI researchers do not see it as an insurmountable task. Still, we might be uncomfortable about the idea that a computer could achieve human intelligence. Thus we return to the problem of how we should define intelligence.

The philosopher John Searle proposed the ‘Chinese room’ argument to challenge the standards implicit in the Turing Test. Imagine a man is locked in a room into which strings of Chinese symbols are sent. He can manipulate them according to a book of rules and produce another set of Chinese characters as the output. Such a system would pass a Turing test for understanding Chinese, but the man inside the room wouldn’t need to understand a word of Chinese.

Considering that the only evidence we have for believing that anyone or anything is intelligent is our interactions with them, we might ask whether we really know if anyone is intelligent.

The major challenge to the ‘Chinese room’ is that while the man inside does not understand Chinese, the system as a whole, including the man, the room, the lists of symbols and the instruction book, does understand Chinese. After all, it is not the man by himself who answers the questions; it is the whole system. And if the system does not understand Chinese, despite being able to converse fluently in it, then who does? This goes back to the original question behind the Turing test: the only way we can judge other people’s intelligence is through our interactions with them. How can we judge a computer’s intelligence, if not in the same way?

The ‘Chinese room’ problem focuses on only one aspect of intelligence: the ability to use and understand language. Creating programs which can understand natural language patterns is one of the major focuses in AI research today with such programs being used to interpret commands in voice control applications and in search engines to allow more intelligent searching. For example, intelligent internet searches look to find the answer to a question holistically, rather than just extracting keywords from it.

However, there are many more aspects to intelligence than the use of language. We might suggest that things like the ability to apply logic, to learn from experience, to reason abstractly or to be creative are examples of intelligent behaviour. While the idea of trying to program a computer to be creative may sound implausible, one approach to this could be to use genetic algorithms.

Starting with random snippets of code, each generation undergoes a random mating process to shuffle and combine the fragments, followed by selection for the best-performing ‘offspring’ for a particular task. The program evolves to give better and better solutions. This method can generate unexpected solutions to problems, and do so as well as an experienced programmer.
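
The loop described above can be sketched in a few lines. This is a deliberately simplified toy rather than a system that evolves real program code: the ‘fragments’ are lists of bits, the task is simply to end up with as many 1s as possible, and the function name, population size and mutation rate are all assumptions chosen for illustration.

```python
import random


def evolve(target_length=20, population_size=30, generations=60, seed=0):
    """Evolve bit strings towards all ones; returns best fitness per generation."""
    rng = random.Random(seed)
    fitness = sum  # fitness of an individual = number of 1s it contains

    # Start from completely random individuals.
    population = [[rng.randint(0, 1) for _ in range(target_length)]
                  for _ in range(population_size)]
    history = []
    for _ in range(generations):
        # Selection: rank by fitness and keep the better half as parents.
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[:population_size // 2]

        # Mating: splice two random parents together at a random cut point.
        children = []
        while len(children) < population_size - len(parents):
            mum, dad = rng.sample(parents, 2)
            cut = rng.randint(1, target_length - 1)
            child = mum[:cut] + dad[cut:]
            # Mutation: occasionally flip one bit to keep variety in the pool.
            if rng.random() < 0.2:
                i = rng.randrange(target_length)
                child[i] = 1 - child[i]
            children.append(child)
        population = parents + children
    return history


history = evolve()
print("best fitness in first generation:", history[0])
print("best fitness in last generation:", history[-1])
```

Because the parents survive unchanged into the next generation, the best score can never fall from one generation to the next. Any improvement comes only from recombination and the occasional mutation.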

AI applications are all around us: Google Translate uses AI technology to translate complex documents; the Roomba autonomous vacuum cleaner can learn its way around a room; and anti-lock braking systems prevent skidding by interactively controlling the braking force on individual wheels. Most people, however, would not consider these systems ‘intelligent’. This is known as the AI effect—as soon as something becomes mainstream, it is not considered to be ‘true’ AI, despite having some intelligent properties. As a result, AI applications have become ubiquitous without us really noticing.

Intelligence comes in many forms and trying to describe, examine and create it is proving to be more difficult than could have been imagined. Animals continue to surprise us with their mental abilities, eroding our ideas of what makes us special. Measuring our own intelligence has come a long way from measuring head size but we still cannot decide what constitutes intelligence. Nature versus nurture rears its ugly head once more, splitting opinions and creating controversy. Our efforts to create intelligence outside the realms of nature are still far from the apocalyptic Hollywood science fiction movies and are currently limited to a slightly more intelligent vacuum cleaner. However, with our understanding of our own minds constantly improving, perhaps it won’t be long before Isaac Asimov’s robots become a reality.

Helen Gaffney is a 3rd year undergraduate in the Department of History and Philosophy of Science

Jessica Robinson is a 3rd year PhD student in the Department of Oncology

Ted Pynegar is a 3rd year undergraduate in the Department of Zoology

Liz Ing-Simmons is a 4th year undergraduate at the Cambridge Systems Biology Centre